CLaMP: Contrastive Language-Music Pre-training for Cross-Modal Symbolic Music Information Retrieval [ISMIR 2023, Best Student Paper Award]

Paper | Code | WikiMusicText Dataset

Authors

- Shangda Wu (Central Conservatory of Music) shangda@mail.ccom.edu.cn

- Dingyao Yu (Microsoft Research Asia) v-dingyaoyu@microsoft.com

- Xu Tan (Microsoft Research Asia) xuta@microsoft.com

- Maosong Sun^ (Central Conservatory of Music, Tsinghua University) sms@tsinghua.edu.cn

^ Corresponding author.

The intellectual property of the CLaMP project is owned by the Central Conservatory of Music.

Abstract

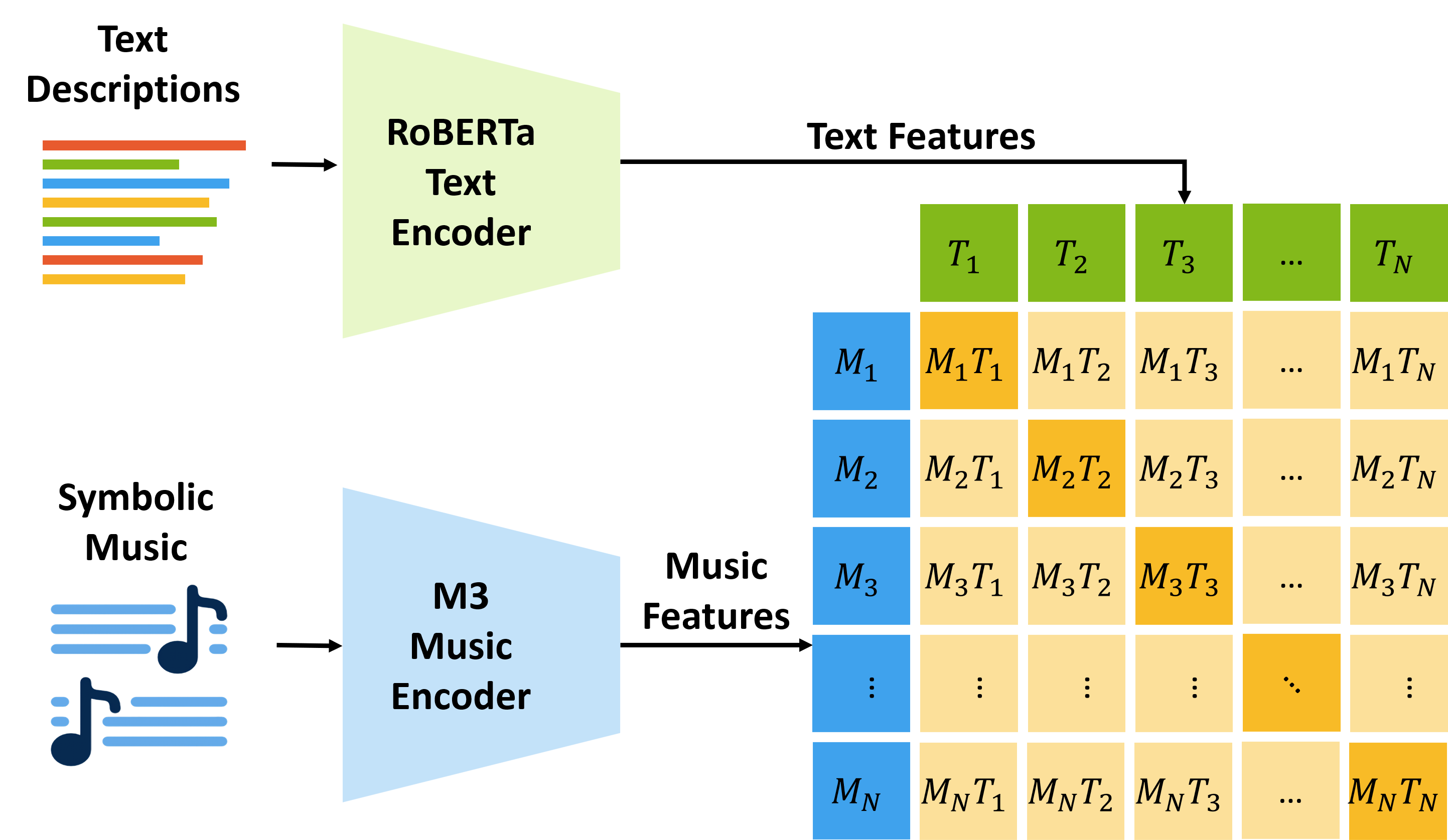

In "CLaMP: Contrastive Language-Music Pre-training for Cross-Modal Symbolic Music Information Retrieval", we introduce a solution for cross-modal symbolic MIR that uses contrastive learning and pre-training. The proposed approach, CLaMP (Contrastive Language-Music Pre-training), learns cross-modal representations between natural language and symbolic music using a music encoder and a text encoder trained jointly with a contrastive loss. To pre-train CLaMP, we collected a large dataset of 1.4 million music-text pairs. CLaMP employs text dropout as a data augmentation technique, and bar patching to represent music data efficiently, which reduces sequence length to less than 10% of the original. In addition, we developed a masked music model pre-training objective to enhance the music encoder's comprehension of musical context and structure. By integrating textual information, CLaMP enables semantic search and zero-shot classification for symbolic music, surpassing the capabilities of previous models. To support the evaluation of semantic search and music classification, we publicly release WikiMusicText (WikiMT), a dataset of 1010 lead sheets in ABC notation, each accompanied by a title, artist, genre, and description. Compared with state-of-the-art models that require fine-tuning, zero-shot CLaMP demonstrated comparable or superior performance on score-oriented datasets.

Figure 1: The architecture of CLaMP, consisting of two encoders, one for music and one for text, trained jointly with a contrastive loss to learn cross-modal representations.
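The joint training with a contrastive loss can be illustrated with a minimal sketch, assuming a CLIP-style symmetric InfoNCE objective over a batch of paired music and text features. The function names and the default temperature of 0.07 are illustrative assumptions, not values taken from the CLaMP code, and real training would operate on encoder outputs as tensors rather than Python lists.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def log_softmax(row: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def symmetric_contrastive_loss(
    music_feats: Sequence[Sequence[float]],
    text_feats: Sequence[Sequence[float]],
    temperature: float = 0.07,  # assumed value, for illustration only
) -> float:
    """The i-th music feature and the i-th text feature form the positive pair;
    every other pairing in the batch acts as a negative."""
    if len(music_feats) != len(text_feats) or not music_feats:
        raise ValueError("need two non-empty batches of equal size")
    m = [l2_normalize(v) for v in music_feats]
    t = [l2_normalize(v) for v in text_feats]
    n = len(m)
    # logits[i][j]: cosine similarity of music i and text j, divided by the temperature.
    logits = [
        [sum(a * b for a, b in zip(m[i], t[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Music-to-text direction: each row should put its probability mass on the diagonal.
    loss_m2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-music direction: the same cross-entropy applied to each column.
    loss_t2m = -sum(
        log_softmax([logits[i][j] for i in range(n)])[j] for j in range(n)
    ) / n
    return (loss_m2t + loss_t2m) / 2
```

Matched pairs on the diagonal of the similarity matrix are pulled together while all other pairings in the batch are pushed apart, which is what places both encoders' outputs in one shared embedding space.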

Cross-Modal Symbolic MIR

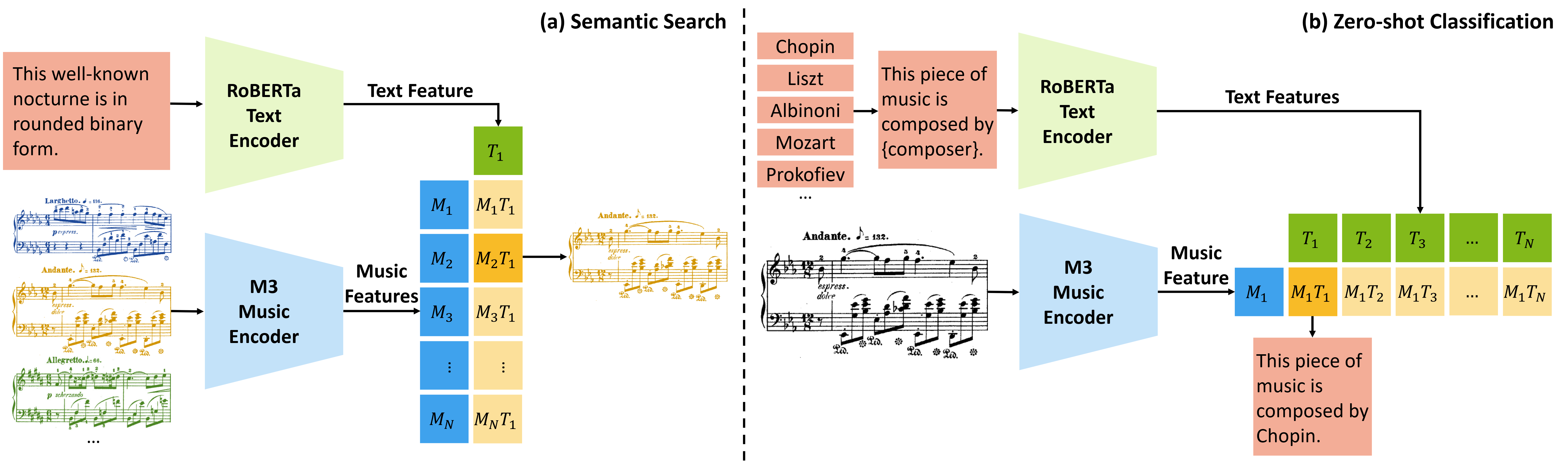

CLaMP is capable of aligning symbolic music and natural language, which can be used for various cross-modal retrieval tasks, including semantic search and zero-shot classification for symbolic music.

Figure 2: The processes of CLaMP performing cross-modal symbolic MIR tasks, including semantic search and zero-shot classification for symbolic music, without requiring task-specific training data.

Semantic search retrieves music using open-domain queries, unlike traditional keyword-based search, which depends on exact matches or meta-information. It involves two steps: 1) extracting music features from all scores in the library, and 2) encoding the query into a text feature. By calculating the similarities between the text feature and the music features, the system can efficiently locate the score in the library that best matches the user's query.
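The two retrieval steps can be sketched as follows, assuming the music features have already been extracted for every score and the query has already been passed through the text encoder; the encoders themselves are out of scope here, and `semantic_search` and its toy inputs are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def semantic_search(
    query_feature: Sequence[float],
    library: Dict[str, Sequence[float]],
    top_k: int = 1,
) -> List[Tuple[str, float]]:
    # Step 1 (offline, assumed done): `library` maps each score ID to its music feature.
    # Step 2 (online, assumed done): `query_feature` is the text feature of the user's query.
    scored = [(score_id, cosine(query_feature, feat)) for score_id, feat in library.items()]
    # Rank all scores by similarity to the query, most similar first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]
```

Because the music features do not depend on the query, they only need to be computed once per library; each new query then costs one text-encoder pass plus the similarity ranking.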

Zero-shot classification assigns new items to any desired label without requiring training data. A prompt template provides context for the text encoder. For example, the prompt "This piece of music is composed by {composer}." is filled in with the name of each candidate composer to form the input texts. The text encoder then outputs a text feature for each of these input texts, while the music encoder extracts a music feature from the unlabelled target score. By calculating the similarity between each candidate text feature and the target music feature, the label with the highest similarity is chosen as the prediction.
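A minimal sketch of this procedure, assuming the text encoder is available as a callable (called `encode_text` here, a hypothetical name) and the music feature of the target score has already been computed. The default template mirrors the composer prompt above; the `field` parameter is an illustrative addition so the same function can fill other templates.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_classify(
    music_feature: Vector,
    candidate_labels: Sequence[str],
    encode_text: Callable[[str], Vector],  # hypothetical stand-in for the text encoder
    template: str = "This piece of music is composed by {composer}.",
    field: str = "composer",
) -> str:
    # Fill the template once per candidate label to build the input texts.
    prompts = [template.format(**{field: label}) for label in candidate_labels]
    # Encode every prompt into a candidate text feature.
    text_features = [encode_text(p) for p in prompts]
    # Score each candidate by its similarity to the target music feature.
    scores = [cosine(music_feature, t) for t in text_features]
    # The label whose prompt is most similar to the music is the prediction.
    best = max(range(len(scores)), key=scores.__getitem__)
    return candidate_labels[best]
```

No classifier head is trained: changing the label set only means filling the template with different candidate names.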

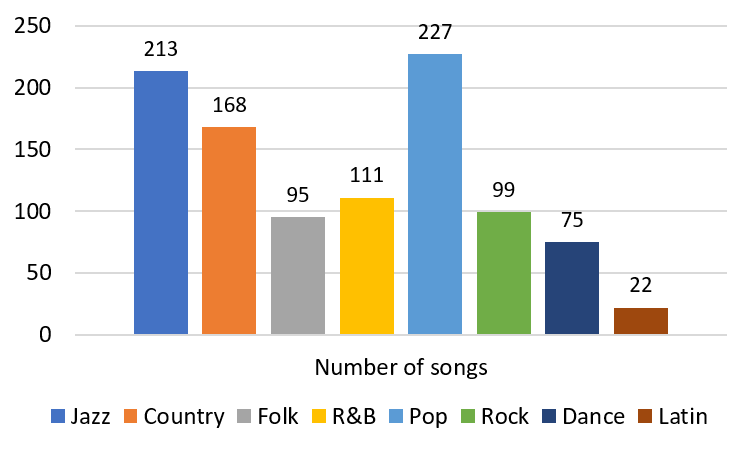

WikiMusicText Dataset

We introduce WikiMusicText (WikiMT), a new dataset for the evaluation of semantic search and music classification. It includes 1010 lead sheets in ABC notation sourced from Wikifonia.org, each accompanied by a title, artist, genre, and description. The title and artist information is extracted from the score, whereas the genre labels are obtained by matching keywords in the corresponding Wikipedia entries and assigned to one of 8 classes that loosely mimic the GTZAN genres. The description is obtained by using BART-large to summarize and clean the corresponding Wikipedia entry. Additionally, natural language information within the ABC notation is removed.
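The exact parts of the ABC notation that were stripped are not listed here. As an illustrative assumption, the sketch below removes the free-text information fields defined in the ABC standard (title, composer, origin, source, notes, transcription, history, and lyrics) while keeping musical fields such as meter (M:), unit note length (L:), and key (K:). The function name `strip_text_fields` and the field set are hypothetical.

```python
# Assumed set of free-text ABC fields: T: title, C: composer, O: origin,
# S: source, N: notes, Z: transcription, H: history, W:/w: lyrics.
TEXT_FIELDS = frozenset("TCOSNZHWw")


def strip_text_fields(abc: str) -> str:
    """Drop whole lines whose ABC field letter is in TEXT_FIELDS."""
    kept = []
    for line in abc.splitlines():
        # An ABC field line starts with a single letter followed by a colon.
        is_field = len(line) >= 2 and line[0].isalpha() and line[1] == ":"
        if is_field and line[0] in TEXT_FIELDS:
            continue
        kept.append(line)
    return "\n".join(kept)
```

This line-based filter does not touch inline fields (for example `[T:...]` inside a music line) or quoted text annotations, which a fuller cleanup would also need to handle.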

Applications

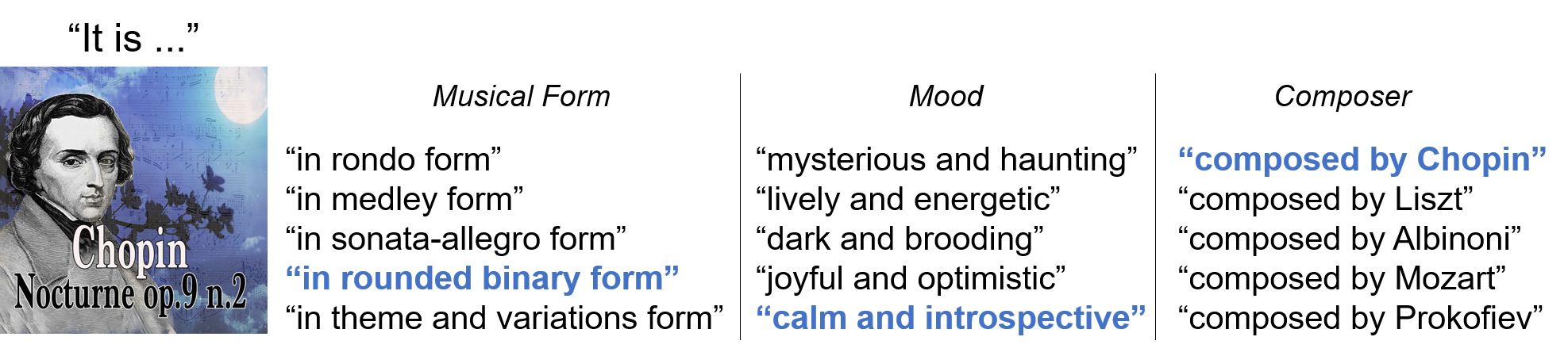

Zero-shot Music Classification (Music -> Text)

Here we use an example to illustrate CLaMP's ability to perform zero-shot classification for symbolic music. The piece is Chopin's Nocturne op. 9 no. 2. We designed several prompts to provide context for the text encoder, covering the form of the piece, its mood, and its composer. For each attribute, we provide five possible choices. The results show that CLaMP selects the correct prompt for every attribute.

Nocturne op. 9 no. 2 (MusicXML, YouTube)

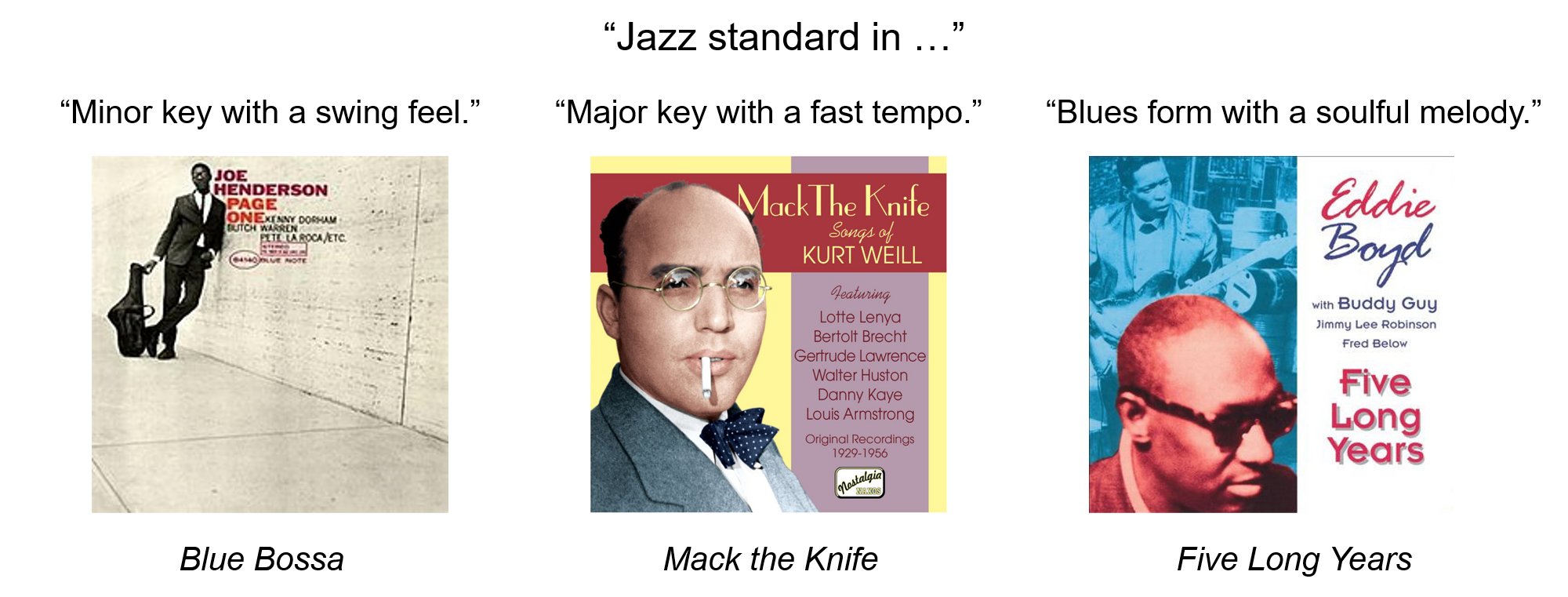

Semantic Music Search (Text -> Music)

We also demonstrate CLaMP's ability to perform semantic search for symbolic music by building a simple music search engine on top of it. The user inputs a query in natural language, and the engine shows the top 1 result from WikiMT (1010 pieces of music). CLaMP can retrieve precise songs given detailed descriptions of the music, such as the genre, key, tempo, and form. For example, if the user inputs "Jazz standard in Minor key with a swing feel.", the search engine returns the song "Blue Bossa" by Kenny Dorham.

Blue Bossa (MusicXML, YouTube); Mack the Knife (MusicXML, YouTube); Five Long Years (MusicXML, YouTube)

Similar Music Recommendation (Music -> Music)

A surprising result is that CLaMP can also recommend similar music given a piece of music, even though it was not trained on this task. Only the music encoder is used: it extracts the music feature of the query piece, and we then calculate the similarity between that feature and the features of all pieces in the library. For example, if the user inputs "We Are the Champions" by Queen, CLaMP recommends the top 3 similar songs from WikiMT: "I Believe I Can Fly" by R. Kelly, "Hold the Line" by Toto, and "My Immortal" by Evanescence.
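Since only the music encoder is involved, music-to-music recommendation reduces to nearest-neighbour search among precomputed music features. The sketch below assumes the query piece is itself part of the library and excludes it from its own results; `recommend_similar` and the toy features are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def recommend_similar(
    query_id: str,
    library: Dict[str, Sequence[float]],  # score ID -> music feature (assumed precomputed)
    top_k: int = 3,
) -> List[str]:
    query = library[query_id]
    # Compare the query's music feature against every other piece; skip the query itself.
    scored = [(sid, cosine(query, feat)) for sid, feat in library.items() if sid != query_id]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [sid for sid, _ in scored[:top_k]]
```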

We Are the Champions (MusicXML, YouTube); I Believe I Can Fly (MusicXML, YouTube); Hold the Line (MusicXML, YouTube); My Immortal (MusicXML, YouTube)

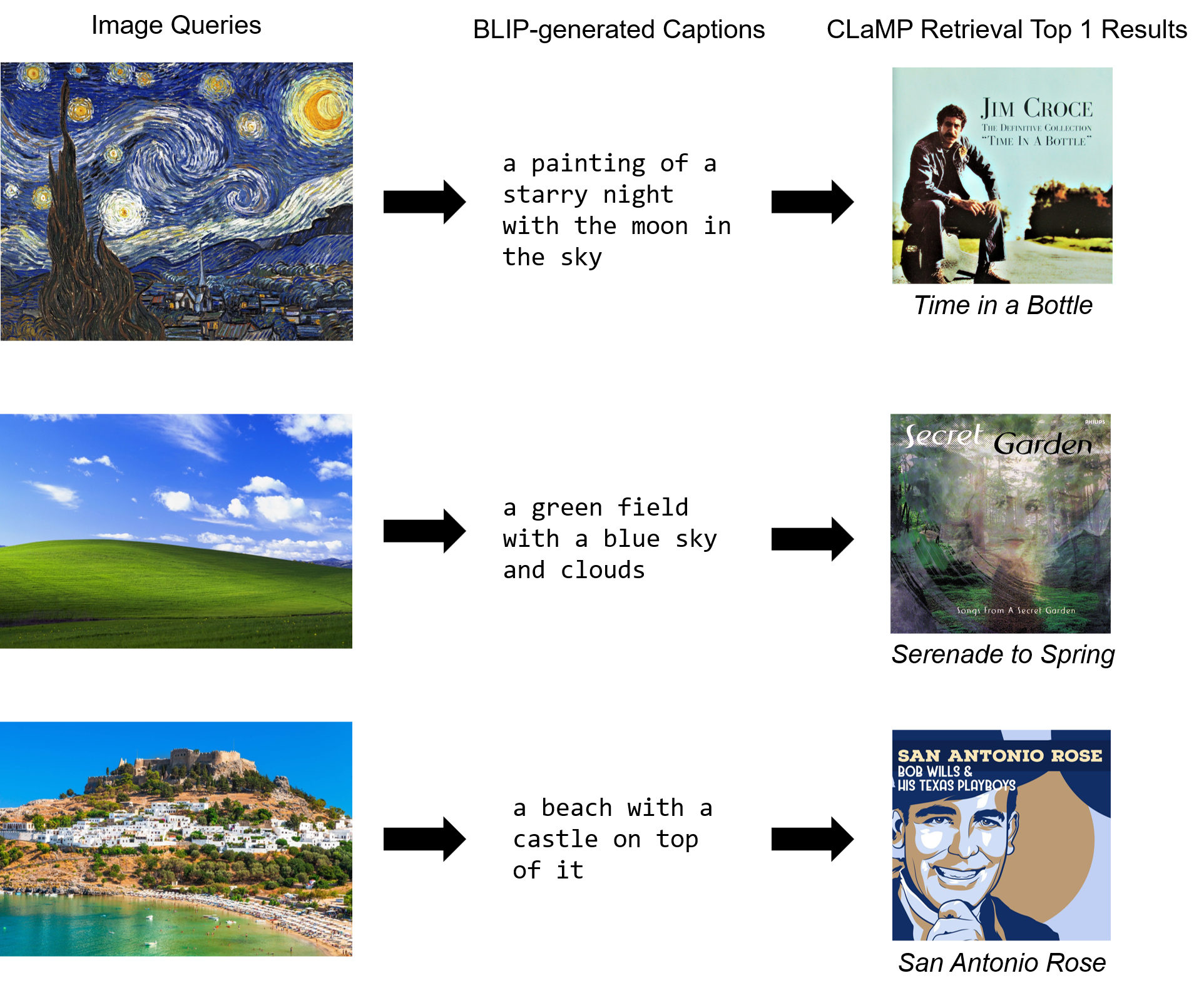

Image-based Music Recommendation (Image -> Text -> Music)

Finally, we demonstrate CLaMP's ability to perform image-based music recommendation. We use the BLIP model to generate a caption for an image, and then use CLaMP to retrieve the top 1 result from WikiMT based on the generated caption. For example, given a painting of a starry night with the moon in the sky, CLaMP recommends the song "Time in a Bottle" by Jim Croce. This capability could be used in a wide range of applications, such as music recommendation for movies, games, and advertisements.

Time in a Bottle (MusicXML, YouTube); Serenade to Spring (MusicXML, YouTube); San Antonio Rose (MusicXML, YouTube)