MuseCoco: Generating Symbolic Music from Text

Abstract

Generating music from text descriptions is a user-friendly mode, since text is a relatively easy interface for user engagement. While some approaches use text to control music audio generation, editing musical elements in generated audio is challenging for users. In contrast, symbolic music is easy to edit, making it more accessible for users to manipulate specific musical elements. In this paper, we propose MuseCoco, which generates symbolic music from text descriptions, using musical attributes as a bridge to break the task into two stages: text-to-attribute understanding and attribute-to-music generation. MuseCoco stands for Music Composition Copilot; it empowers musicians to generate music directly from given text descriptions, offering a significant improvement in efficiency compared to creating music entirely from scratch. The system has two main advantages. First, it is data efficient. In the attribute-to-music generation stage, the attributes can be extracted directly from music sequences, which makes model training self-supervised. In the text-to-attribute understanding stage, the text is synthesized and refined by ChatGPT based on the defined attribute templates. Second, the system achieves precise control with specific attributes in text descriptions and offers multiple control options through attribute-conditioned or text-conditioned approaches. MuseCoco outperforms baseline systems in musicality, controllability, and overall score by at least 1.27, 1.08, and 1.32, respectively. It also improves objective control accuracy by about 20%. In addition, we have developed a robust large-scale model with 1.2 billion parameters, showcasing exceptional controllability and musicality.
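The abstract notes that attribute-to-music training is self-supervised because attributes can be read directly off the music, and that MuseCoco is evaluated on objective control accuracy. As a minimal sketch of both ideas, the Python below labels a toy note list with a few attributes and scores how many requested attributes the music actually satisfies. The `Note` type, the attribute names, the tempo thresholds, and the octave-span rule are all illustrative assumptions, not MuseCoco's actual attribute definitions or extractor.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    pitch: int        # MIDI pitch number (0-127)
    onset: float      # onset in beats from the start of the piece
    duration: float   # length in beats
    instrument: str   # instrument label, e.g. "piano"


def tempo_bucket(bpm: float) -> str:
    # Illustrative thresholds (assumption); MuseCoco's real tempo bins may differ.
    if bpm < 76:
        return "slow"
    if bpm <= 120:
        return "moderato"
    return "fast"


def extract_attributes(notes: list[Note], beats_per_bar: int, bpm: float) -> dict:
    """Label a note sequence with attributes computed from the notes themselves."""
    end_beat = max(n.onset + n.duration for n in notes)
    bars = math.ceil(end_beat / beats_per_bar)
    pitches = [n.pitch for n in notes]
    # Pitch span in octaves, rounded up; a single repeated pitch counts as 1.
    octaves = max(1, math.ceil((max(pitches) - min(pitches)) / 12))
    return {
        "bars": bars,
        "octaves": octaves,
        "instruments": frozenset(n.instrument for n in notes),
        "tempo": tempo_bucket(bpm),
        "note_density": len(notes) / bars,  # average notes per bar
    }


def control_accuracy(requested: dict, extracted: dict) -> float:
    """Fraction of requested attribute values that the extracted labels match."""
    hits = sum(extracted.get(key) == value for key, value in requested.items())
    return hits / len(requested)


# Usage: two bars of 4/4 piano at 90 BPM.
notes = [Note(60, 0, 1, "piano"), Note(64, 1, 1, "piano"), Note(67, 2, 2, "piano"),
         Note(72, 4, 2, "piano"), Note(76, 6, 2, "piano")]
attrs = extract_attributes(notes, beats_per_bar=4, bpm=90)
# attrs["bars"] == 2, attrs["octaves"] == 2, attrs["tempo"] == "moderato"
score = control_accuracy({"bars": 2, "octaves": 3, "tempo": "moderato"}, attrs)
# score == 2/3, because the requested 3-octave span is not met
```

Because every label comes from the notes, any collection of MIDI files yields (attributes, music) training pairs without human annotation, and the same extractor can check generated music against the requested attributes.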

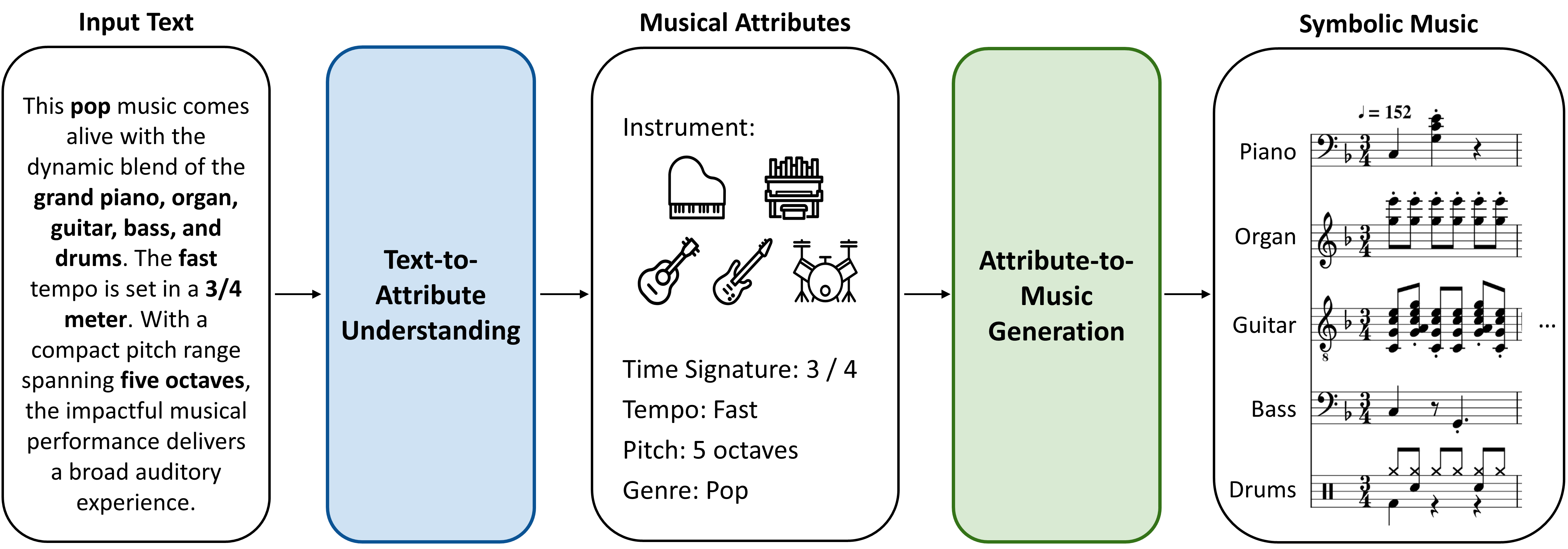

Figure 1: The two-stage framework of MuseCoco. Text-to-attribute understanding extracts diverse musical attributes, based on which symbolic music is generated through the attribute-to-music generation stage.
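The text-to-attribute stage needs (text, attribute) pairs for training, and the abstract says these texts are synthesized and refined by ChatGPT based on defined attribute templates. The sketch below illustrates only a plain template-filling step that could produce such pairs; the ChatGPT refinement is omitted. The templates are modeled loosely on the sample descriptions on this page, and `TEMPLATES`, `verbalize`, and `synthesize` are hypothetical names, not part of MuseCoco.

```python
import random

# Hypothetical sentence templates, one list per attribute; "{v}" is the value.
TEMPLATES = {
    "time_signature": ["{v} is the time signature of the music.",
                       "The music is written in {v} time."],
    "bars": ["You can hear about {v} bars in this song.",
             "The song's length is around {v} bars."],
    "instruments": ["The music is given its sound through {v}.",
                    "The musical performance features {v}."],
}


def verbalize(value) -> str:
    """Render a value as text, joining lists as "a, b and c"."""
    if isinstance(value, (list, tuple)):
        items = [str(x) for x in value]
        if len(items) == 1:
            return items[0]
        return ", ".join(items[:-1]) + " and " + items[-1]
    return str(value)


def synthesize(attributes: dict, rng: random.Random) -> tuple[str, dict]:
    """Fill one randomly chosen template per attribute, then shuffle the sentences."""
    sentences = []
    for key, value in attributes.items():
        sentence = rng.choice(TEMPLATES[key]).format(v=verbalize(value))
        sentences.append(sentence[:1].upper() + sentence[1:])  # capitalize first letter
    rng.shuffle(sentences)
    # The attribute dict serves as the supervision label for a text-to-attribute model.
    return " ".join(sentences), dict(attributes)


text, labels = synthesize(
    {"time_signature": "2/4", "bars": 14, "instruments": ["saxophone", "drum", "trombone"]},
    random.Random(0),
)
```

Randomizing both the template choice and the sentence order gives varied phrasings of the same attribute set, which is the property a downstream rewriting step would then build on.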

Please note: this work focuses on generating symbolic music, which does not contain timbre information. We use MuseScore (https://musescore.org/en) to export the mp3 files for reference; musicians are encouraged to use the .mid files for further timbre improvement.

Comparison with Baselines

Group 1

| BART | GPT-4 | MuseCoco |
|---|---|---|

Comments:

BART-base: The generated sample from BART demonstrates inadequate control over attributes such as time signature, duration, and octaves. Furthermore, the sample's musicality suffers due to a lack of harmonies, resulting in an incomplete composition.

GPT-4: The generated music from GPT-4 is well controlled. However, it tends to sound monotonous because its rhythm patterns lack variation, which is common in most music generated by GPT-4. Furthermore, some contrapuntal lines, specifically in the 4th and 10th bars, are poorly integrated, detracting from the overall quality of the piece.

MuseCoco: The generated music from MuseCoco is controlled precisely. Besides, it has more complex harmonies and more diverse rhythm patterns for a better listening experience.

Group 2

| BART | GPT-4 | MuseCoco |
|---|---|---|

Comments:

BART-base: The generated music solely relies on the piano, which deviates from the consistent instrumentation described in the text. In terms of musicality, the rhythm lacks fluency, with many unexpected rests that disrupt the listening experience.

GPT-4: While the generated music exhibits precise control, the overall musicality falls short of expectations. The drum pattern deviates from the usual rhythmic sensibility, failing to provide a satisfying rhythmic foundation. Additionally, the note pitches used for the bass are not suitable, resulting in a lack of a cohesive bass line, which is crucial for a complete and well-rounded composition.

MuseCoco: The generated music demonstrates precise control. The piano effectively carries the melody, while the bass provides a solid foundation with its bass line, enhancing the depth of the composition. The drums, which enter in the middle, add varied rhythmic patterns, further enriching the overall musical texture.

Group 3

| BART | GPT-4 | MuseCoco |
|---|---|---|

Comments:

BART-base: The generated music suffers from inaccuracies in its control of time signature, duration, octaves, and note density. Furthermore, the melody line lacks variation, resulting in a monotonous composition with repetitive rhythm patterns and harmonic progressions.

GPT-4: The generated music lacks precision in controlling duration, octaves, and note density. As a result, the composition suffers from a monotonous melody characterized by simplistic rhythms and a lack of harmonies.

MuseCoco: The generated music demonstrates precise control, particularly notable in the dense arrangement of notes. This intricate composition style contributes to its memorability and adds an interesting and captivating quality to the music.

Group 4

| BART | GPT-4 | MuseCoco |
|---|---|---|

Comments:

BART-base: The generated music deviates from the attributes specified in the text description, such as time signature, duration, and octaves. Furthermore, the style of the music does not resemble that of Chopin. Additionally, the abnormal usage of rests within each voice part raises doubts about the composition's coherence and musicality.

GPT-4: The generated music falls short in multiple aspects. It does not span 5 octaves as specified, and it fails to capture the essence of Chopin's style. The melody lacks variation, resulting from limited rhythm patterns and harmonies. Additionally, the inclusion of two identical tracks introduces redundancy and detracts from the overall composition.

MuseCoco: The generated music demonstrates precise control. Specifically, the 5th and 6th bars skillfully capture the distinct essence and emotive qualities associated with Chopin's style, effectively conveying the desired musical expression.

Group 5

| BART | GPT-4 | MuseCoco |
|---|---|---|

Comments:

BART-base: The generated music is a monophonic piano line that lacks the specified bass, voice, flute, and drums. It also has the wrong time signature (6/8).

GPT-4: The generated music has an incorrect pitch range (1 octave) and tempo (very slow). Its melody is also monotonous and repetitive.

MuseCoco: The generated music from MuseCoco is controlled precisely. The chanting at a moderato tempo conveys a peaceful, religious style.

Group 6

| BART | GPT-4 | MuseCoco |
|---|---|---|

Comments:

BART-base: The generated music is too long, has only a monophonic melody, and does not resemble Brahms's style.

GPT-4: The tempo of the music generated by GPT-4 is too slow, and its pitch range spans less than one octave; neither matches the text. The melody is also too simple to evoke Brahms.

MuseCoco: The generated music demonstrates precise control. Specifically, it is in 4/4 time, in a minor key, and at a fast tempo. The development of the multi-layered piano texture reflects Brahms's style.

Group 7

| BART | GPT-4 | MuseCoco |
|---|---|---|

Comments:

BART-base: The generated music is too long to match the text. It also consists of disconnected notes and a dull melody, which cannot express Schubert's classical style.

GPT-4: The pitch range of the generated music is limited to only one octave. Its simple melody lacks Schubert's classical style.

MuseCoco: The generated music is controlled perfectly. It uses the piano at a moderate tempo in a major key, evoking the relaxed 3/4 feel of a Schubert minuet.

Generation Diversity

We test generation diversity by generating several samples from the same text description.

Sample 1

Text Description:

Music is representative of the typical pop sound and spans 13 ~ 16 bars, this is a song that has a bright feeling from the beginning to the end. This song has a very fast and lively rhythm. The use of a specific pitch range of 3 octaves creates a cohesive and unified sound throughout the musical piece.

Generated Samples:

Sample 2

Text Description:

3/4 is the time signature of the music. This song is unmistakably classical in style. This music is low-tempo. The use of piano is vital to the music.

Generated Samples:

Sample 3

Text Description:

The flute creates a lively and upbeat atmosphere in this fast-paced song with just the right tempo, making it perfect for a tropical setting. The time signature of this song is not usual. The music is enriched by oboe, trumpet, flute and tuba.

Generated Samples:

Sample 4

Text Description:

The song's upbeat melody and swift pace, paired with its 3 octave pitch range and 3/4 time signature, offers a diverse and dynamic listening experience that suits a bustling cityscape. Strings, drum, clarinet and flute are utilized in the musical performance. The song's length is around about 14 bars.

Generated Samples:

Sample 5

Text Description:

This song has a steady and moderate rhythm. The music is given its sound through saxophone, drum and trombone. You can hear about 14 bars in this song. 2/4 is the time signature of the music.

Generated Samples:

Controlled by Specific Attributes

The model can also take specific attribute values, rather than text descriptions, as conditions for generating the corresponding music.

| Attribute Value | Generated Samples |
|---|---|
| Rhythm Intensity: moderate; Bar: 16; Time Signature: 4/4; Tempo: moderato; Time: 30-45s | |
| Instrument: violin; Rhythm Intensity: intense; Tempo: slow; Time: >60s; Artist Style: Bach | |
| Instrument: piano, drum, bass; Tempo: moderato | |
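When attribute values are supplied directly, as in the table above, the text-to-attribute stage is skipped and the values condition the attribute-to-music model on their own. One common way to do this, and the assumption made here, is to serialize the attributes as a prefix of discrete tokens placed before the music tokens, with a placeholder for every attribute the user leaves unspecified. The token format, the `<sep>` marker, `ATTRIBUTE_ORDER`, and `attributes_to_prefix` are hypothetical, not MuseCoco's actual vocabulary.

```python
# Hypothetical fixed attribute order so every prefix has the same layout.
ATTRIBUTE_ORDER = ["instrument", "rhythm_intensity", "bar", "time_signature",
                   "tempo", "time", "artist_style"]


def attributes_to_prefix(attrs: dict) -> list[str]:
    """Serialize attribute values into condition tokens; missing ones become NA."""
    tokens = []
    for name in ATTRIBUTE_ORDER:
        value = attrs.get(name)
        if value is None:
            tokens.append(f"<{name}=NA>")  # attribute left unspecified by the user
        elif isinstance(value, (list, tuple)):
            # Multi-valued attributes (e.g. several instruments): one sorted token each.
            tokens.extend(f"<{name}={v}>" for v in sorted(value))
        else:
            tokens.append(f"<{name}={value}>")
    tokens.append("<sep>")  # a generator would continue with music tokens after this
    return tokens


# Third row of the table: piano, drum, bass at a moderato tempo.
prefix = attributes_to_prefix({"instrument": ["piano", "drum", "bass"], "tempo": "moderato"})
```

Emitting an explicit NA token, rather than omitting the attribute, lets one model handle both fully specified and partially specified conditions, which matches the varying number of attributes in each table row.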